UniRestore: Unified Perceptual and Task-Oriented Image Restoration Model Using Diffusion Prior

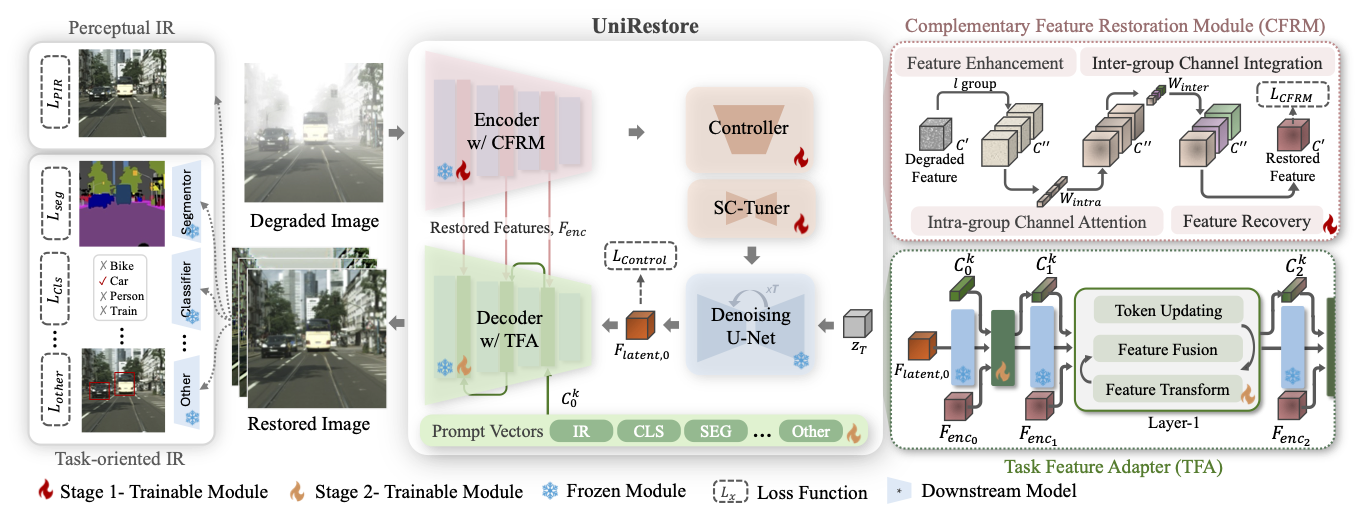

UniRestore leverages a diffusion prior to unify Perceptual Image Restoration (PIR) and Task-oriented Image Restoration (TIR), achieving both high visual fidelity and task utility. PIR enhances visual clarity, but its outputs may not benefit recognition tasks. In contrast, TIR optimizes features for tasks such as classification or segmentation but often compromises visual appeal.

Abstract

Image restoration aims to recover content from inputs degraded by various factors, such as adverse weather, blur, and noise. Perceptual Image Restoration (PIR) methods improve visual quality but often do not support downstream tasks effectively. On the other hand, Task-oriented Image Restoration (TIR) methods focus on enhancing image utility for high-level vision tasks, sometimes compromising visual quality. This paper introduces UniRestore, a unified image restoration model that bridges the gap between PIR and TIR by using a diffusion prior. The diffusion prior is designed to generate images that align with human visual quality preferences, but these images are often unsuitable for TIR scenarios.

To address this limitation, UniRestore uses encoder features from an autoencoder to adapt the diffusion prior to specific tasks. We propose a Complementary Feature Restoration Module (CFRM) to reconstruct degraded encoder features and a Task Feature Adapter (TFA) module to facilitate adaptive feature fusion in the decoder. This design allows UniRestore to optimize images for both human perception and downstream task requirements, addressing discrepancies between visual quality and functional needs. Integrating these modules also enhances UniRestore’s adaptability and efficiency across diverse tasks. Extensive experiments demonstrate the superior performance of UniRestore in both PIR and TIR scenarios.

Architecture of UniRestore

The main contributions of UniRestore are two modules: the Complementary Feature Restoration Module (CFRM), which restores degraded encoder features, and the Task Feature Adapter (TFA), which uses task-specific prompts to adapt the diffusion prior to each downstream task. Together they enable unified and extensible performance across both perceptual and task-oriented image restoration.

UniRestore bridges perceptual and task-oriented image restoration by incorporating two essential modules. The CFRM restores clean features from degraded inputs, guiding the denoising process with enhanced representations. To adapt the diffusion prior to diverse downstream tasks, we introduce the TFA, which dynamically fuses restored and generated features through lightweight task-specific prompts. By enabling unified modeling and efficient task extensibility, UniRestore achieves high visual fidelity while preserving strong task performance across various real-world scenarios.
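The data flow described above can be sketched in a few lines. This is a minimal, hypothetical wiring in Python/NumPy, not the authors' code: every component (`encode`, `cfrm`, `decoder_layers`, `fuse`) is a placeholder callable, the reverse (U-Net-style) pairing of encoder levels with decoder layers is an assumption, and the diffusion latent is simply passed in because the way CFRM features guide the denoising is not detailed here.

```python
import numpy as np


def unirestore_forward(degraded, latent, encode, cfrm, decoder_layers, fuse, task_prompts):
    """Hypothetical wiring of the UniRestore components (all callables are stand-ins).

    degraded:       degraded input image.
    latent:         latent produced by the diffusion prior; how CFRM features guide
                    the denoising that produces it is not modeled here.
    encode:         autoencoder encoder, returning one feature map per decoder layer
                    (ordered shallow to deep).
    cfrm:           restores a single degraded encoder feature map.
    decoder_layers: decoder blocks, applied coarse to fine.
    fuse:           TFA-style fusion, fuse(restored, generated, prompt) -> features.
    task_prompts:   one prompt per decoder layer for the selected task.
    """
    restored = [cfrm(f) for f in encode(degraded)]
    # Pair the deepest encoder features with the first decoder layer (assumption).
    restored = restored[::-1]
    if not (len(restored) == len(decoder_layers) == len(task_prompts)):
        raise ValueError("need one encoder feature, decoder layer and prompt per level")

    h = latent
    for layer, feat, prompt in zip(decoder_layers, restored, task_prompts):
        generated = layer(h)               # features carrying the diffusion prior
        h = fuse(feat, generated, prompt)  # task-conditioned fusion at this layer
    return h
```

Switching tasks changes only `task_prompts`; the encoder, CFRM, and decoder stay shared, which mirrors the single-model design described above.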

Proposed Modules

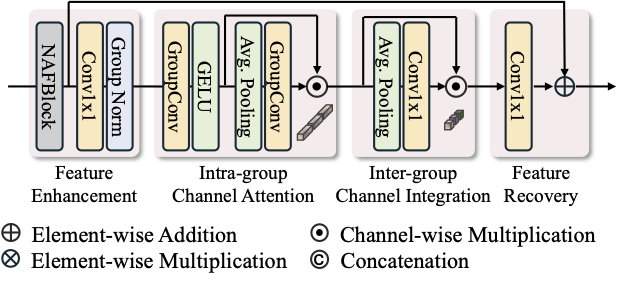

The Complementary Feature Restoration Module (CFRM) restores clean, informative features from degraded inputs within the encoder. It enhances feature quality through a four-step process: feature enhancement, intra-group channel attention, inter-group channel integration, and feature recovery. By modeling diverse degradation patterns and emphasizing task-relevant information, CFRM provides complementary representations that guide the decoder toward high-quality image restoration and improved downstream performance.
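A toy version of the four CFRM steps might look like the following. The concrete operators are assumptions chosen for illustration (1x1 channel mixing with ReLU for enhancement, an SE-style sigmoid gate computed within each channel group, a full 1x1 mix across groups, and a residual add for recovery); the function name `cfrm_sketch` and its weight arguments are hypothetical, and the paper's module may differ in every detail.

```python
import numpy as np


def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def _conv1x1(weight, feat):
    # weight: (C_out, C_in); feat: (C_in, H, W) -> (C_out, H, W)
    return np.einsum("oc,chw->ohw", weight, feat)


def cfrm_sketch(feat, num_groups, w_enhance, w_integrate):
    """Hypothetical four-step CFRM-style block on one encoder feature map.

    feat:        (C, H, W) degraded encoder features.
    num_groups:  number of channel groups G (C must be divisible by G).
    w_enhance, w_integrate: (C, C) stand-ins for learned 1x1 convolutions.
    """
    c, h, w = feat.shape
    if c % num_groups != 0:
        raise ValueError("channels must be divisible by num_groups")

    # 1) Feature enhancement: channel mixing followed by ReLU.
    x = np.maximum(_conv1x1(w_enhance, feat), 0.0)

    # 2) Intra-group channel attention: each channel is gated by how its pooled
    #    response compares with the other channels in its own group.
    groups = x.reshape(num_groups, c // num_groups, h, w)
    pooled = groups.mean(axis=(2, 3), keepdims=True)  # (G, C/G, 1, 1)
    gate = _sigmoid(pooled - pooled.mean(axis=1, keepdims=True))
    x = (groups * gate).reshape(c, h, w)

    # 3) Inter-group channel integration: a full 1x1 mix lets groups exchange information.
    x = _conv1x1(w_integrate, x)

    # 4) Feature recovery: add the predicted correction back to the degraded features.
    return feat + x
```

Splitting channels into groups lets each group be gated independently, which is one plausible way to let different groups respond to different degradation patterns; the inter-group step then lets those groups share information before recovery.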

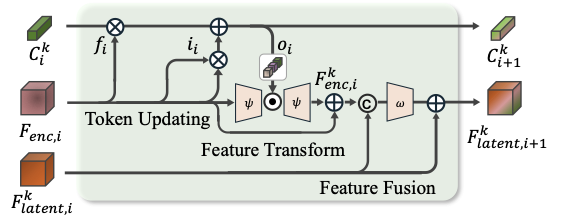

The Task Feature Adapter (TFA) adapts restored encoder features to diverse recognition tasks by fusing them with diffusion features in the decoder. Instead of using a separate adapter per task, TFA employs lightweight, learnable prompts that guide feature fusion at each layer. These prompts are learned per task, allowing efficient task adaptation without retraining the entire model. This design scales well and extends to new tasks with minimal overhead.
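The prompt-guided fusion at a single decoder layer can be sketched as a per-channel gate between the two feature streams. This is an illustrative assumption, not the published TFA: the gate form, the shared weights `w_feat` and `w_prompt`, and the function name `tfa_fuse` are all hypothetical. What it shows is that the fusion weights are shared across tasks, while the small vector `prompt` is the only per-task input.

```python
import numpy as np


def tfa_fuse(restored, generated, prompt, w_feat, w_prompt):
    """Hypothetical prompt-guided fusion at one decoder layer.

    restored:  (C, H, W) encoder features after CFRM.
    generated: (C, H, W) features from the diffusion-prior decoder path.
    prompt:    (D,) learnable task prompt; the only task-specific parameters.
    w_feat:    (C, 2C) and w_prompt: (C, D) are shared by every task.
    """
    # Global-average-pool both streams so the gate can react to the current input.
    stats = np.concatenate([restored.mean(axis=(1, 2)), generated.mean(axis=(1, 2))])
    # Per-channel gate logits from the feature statistics plus the task prompt.
    logits = w_feat @ stats + w_prompt @ prompt
    gate = (1.0 / (1.0 + np.exp(-logits)))[:, None, None]
    # gate -> 1 keeps restored encoder detail; gate -> 0 keeps the generated features.
    return gate * restored + (1.0 - gate) * generated
```

Under this sketch, each task costs only `D` extra numbers per decoder layer, which is what makes per-task prompts lighter than per-task adapters.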

Moreover, introducing an additional task is simple and efficient: only a new task-specific prompt needs to be added and trained, without modifying the main model or accessing previous data. This enables scalable multi-task extension.
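Adding a task by training only its prompt can be illustrated with a small prompt store and a prompt-only optimizer. Everything here is hypothetical: `PromptBank`, `fit_prompt`, the simplified gate (one logit per channel), the zero initialization, and the MSE loss against a target feature map, which stands in for the real downstream-task loss. The restored and generated features are treated as fixed outputs of the frozen model, so the only array that changes is the new task's prompt.

```python
import numpy as np


def _fuse(restored, generated, prompt):
    # Simplified TFA-style gate: prompt holds one logit per channel (assumption).
    gate = (1.0 / (1.0 + np.exp(-prompt)))[:, None, None]
    return gate * restored + (1.0 - gate) * generated, gate


class PromptBank:
    """Hypothetical store of per-task, per-layer prompts; the frozen model is not modeled."""

    def __init__(self, num_layers, channels):
        self.num_layers = num_layers
        self.channels = channels
        self.prompts = {}  # task name -> list of (C,) arrays, one per decoder layer

    def add_task(self, name):
        if name in self.prompts:
            raise ValueError(f"task {name!r} already exists")
        # Zero init: every gate starts at 0.5, an even blend of the two streams.
        self.prompts[name] = [np.zeros(self.channels) for _ in range(self.num_layers)]
        return self.prompts[name]

    def num_params(self, name):
        return sum(p.size for p in self.prompts[name])


def fit_prompt(prompt, restored, generated, target, lr=0.5, steps=100):
    """Gradient descent on MSE(fused, target) with respect to `prompt` only.

    `restored` and `generated` stand in for frozen-model outputs, so nothing
    outside `prompt` is updated. Returns the loss recorded before each step.
    """
    n = target.size
    losses = []
    for _ in range(steps):
        fused, gate = _fuse(restored, generated, prompt)
        diff = fused - target
        losses.append(float(np.mean(diff ** 2)))
        # dL/dgate_c = sum over H, W of 2 * diff * (restored - generated) / n
        d_gate = (2.0 / n) * np.sum(diff * (restored - generated), axis=(1, 2))
        # Chain rule through the sigmoid: dgate/dprompt = gate * (1 - gate).
        g = gate[:, 0, 0]
        prompt -= lr * d_gate * g * (1.0 - g)  # in place: updates the stored prompt
    return losses
```

Because the new prompt is a fresh array and the loss only needs new-task features, the existing prompts and any earlier training data are never touched.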

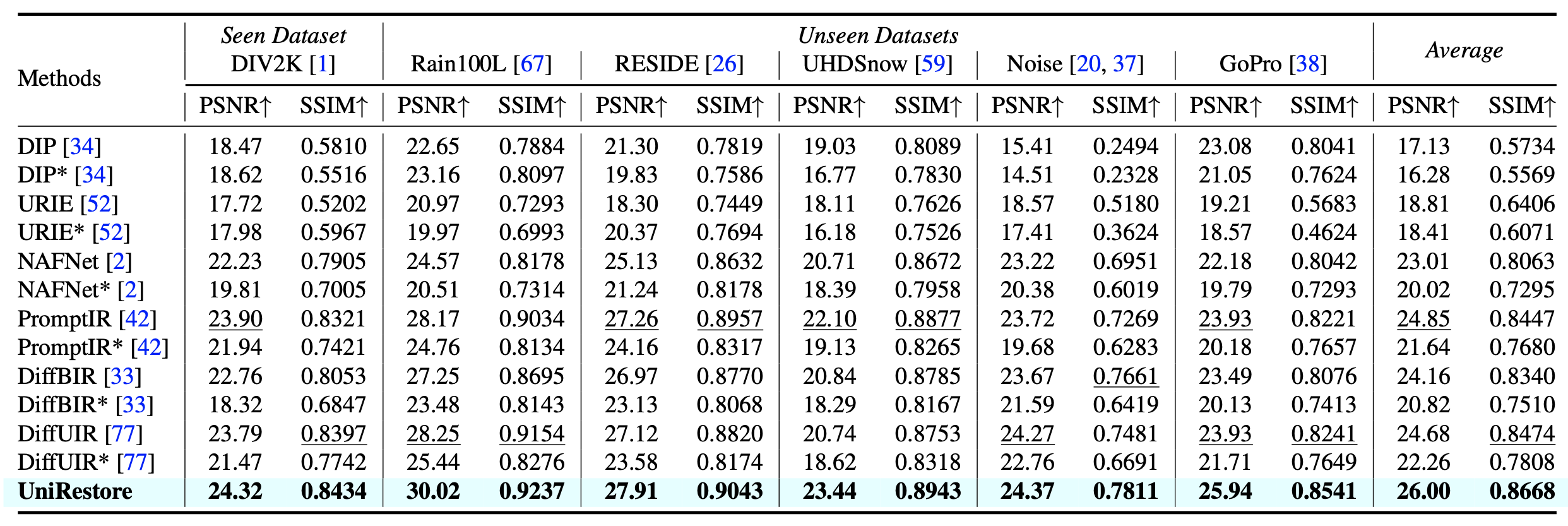

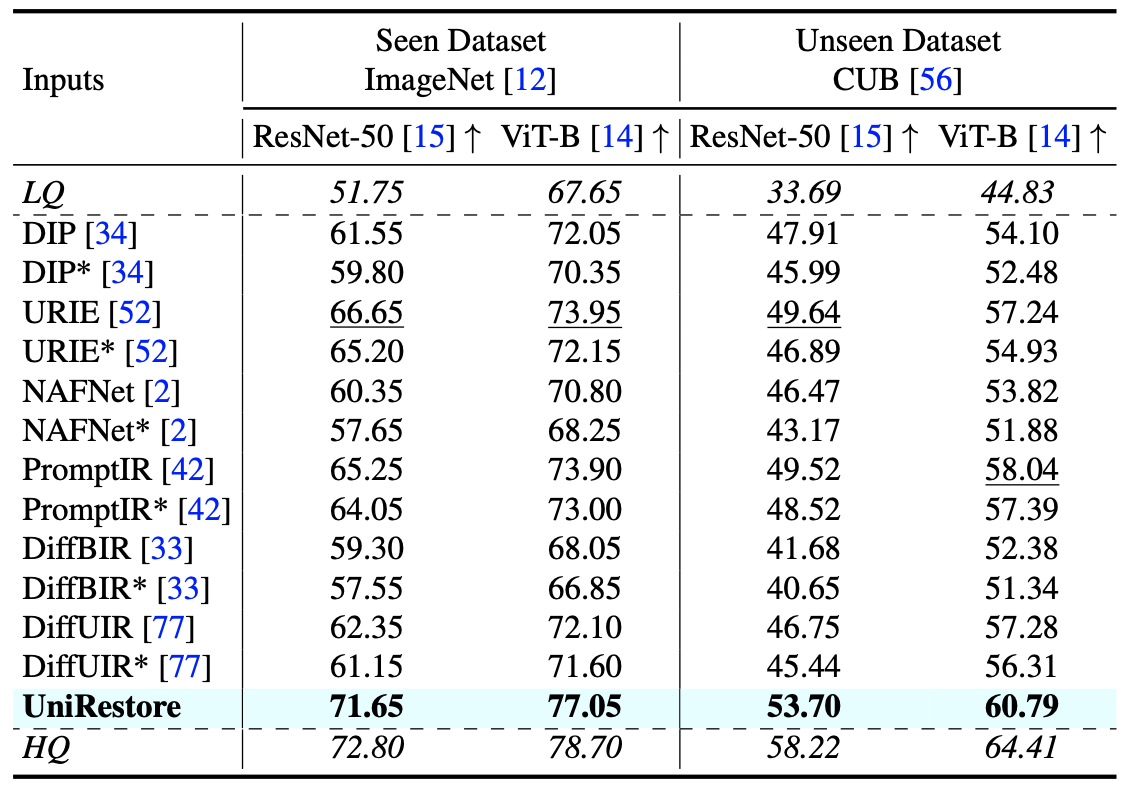

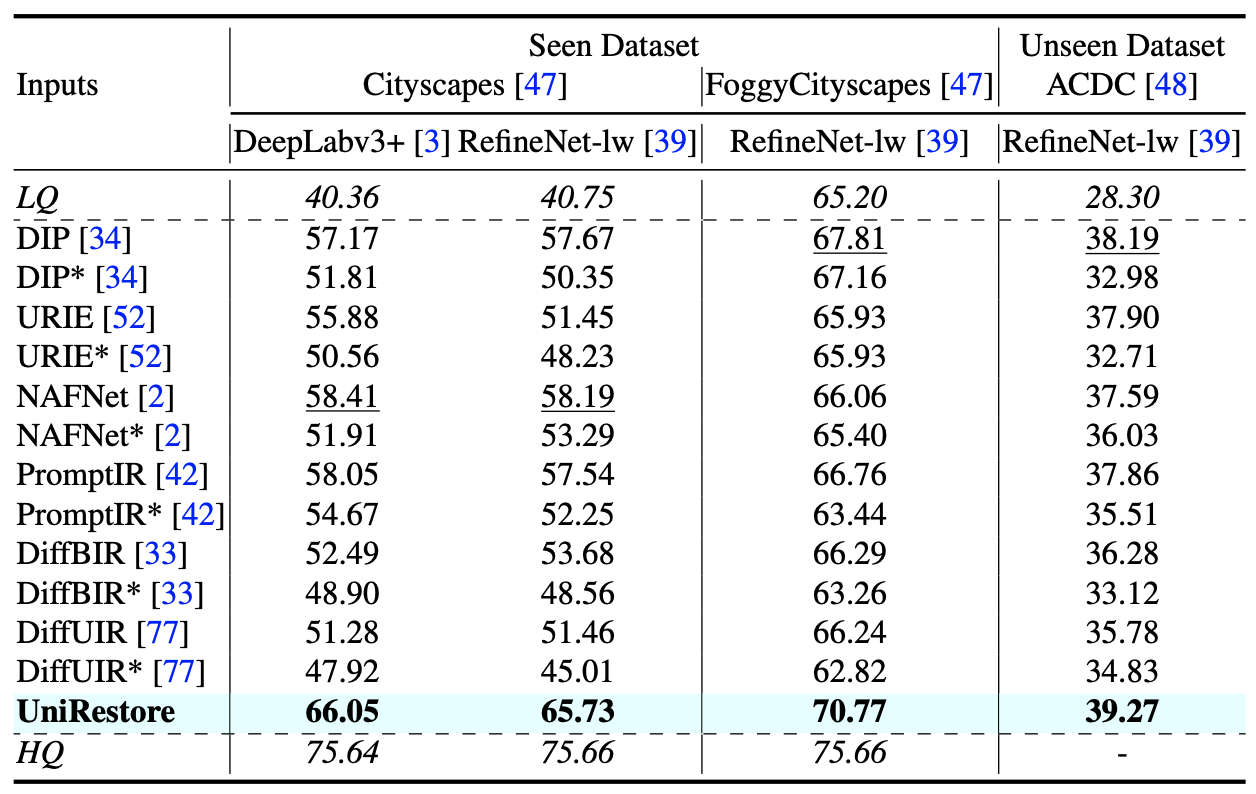

Quantitative Results

Quantitative results demonstrate that while PIR- and TIR-based methods excel in their respective tasks, they struggle to generalize beyond their original objectives. In contrast, UniRestore consistently achieves strong performance across perceptual restoration, classification, and segmentation using a single unified model. It not only outperforms existing baselines in multi-task settings but also generalizes effectively to unseen datasets and unknown downstream models, highlighting its robustness and scalability.

Methods marked with "*" indicate models trained under the UniRestore multi-task setting; all others are trained with their original objectives.

Qualitative Results

UniRestore effectively removes degradation patterns and reconstructs finer details, improving visual quality in the PIR task. For the TIR task, UniRestore emphasizes high-frequency information, which improves activation map alignment and yields more accurate object boundaries, demonstrating enhanced spatial consistency and stronger overall task performance.

BibTeX

@inproceedings{chen2025unirestore,
  title={UniRestore: Unified Perceptual and Task-Oriented Image Restoration Model Using Diffusion Prior},
  author={Chen, I and Chen, Wei-Ting and Liu, Yu-Wei and Chiang, Yuan-Chun and Kuo, Sy-Yen and Yang, Ming-Hsuan and others},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={17969--17979},
  year={2025}
}